CONFIA: Towards the development of trustworthy AI

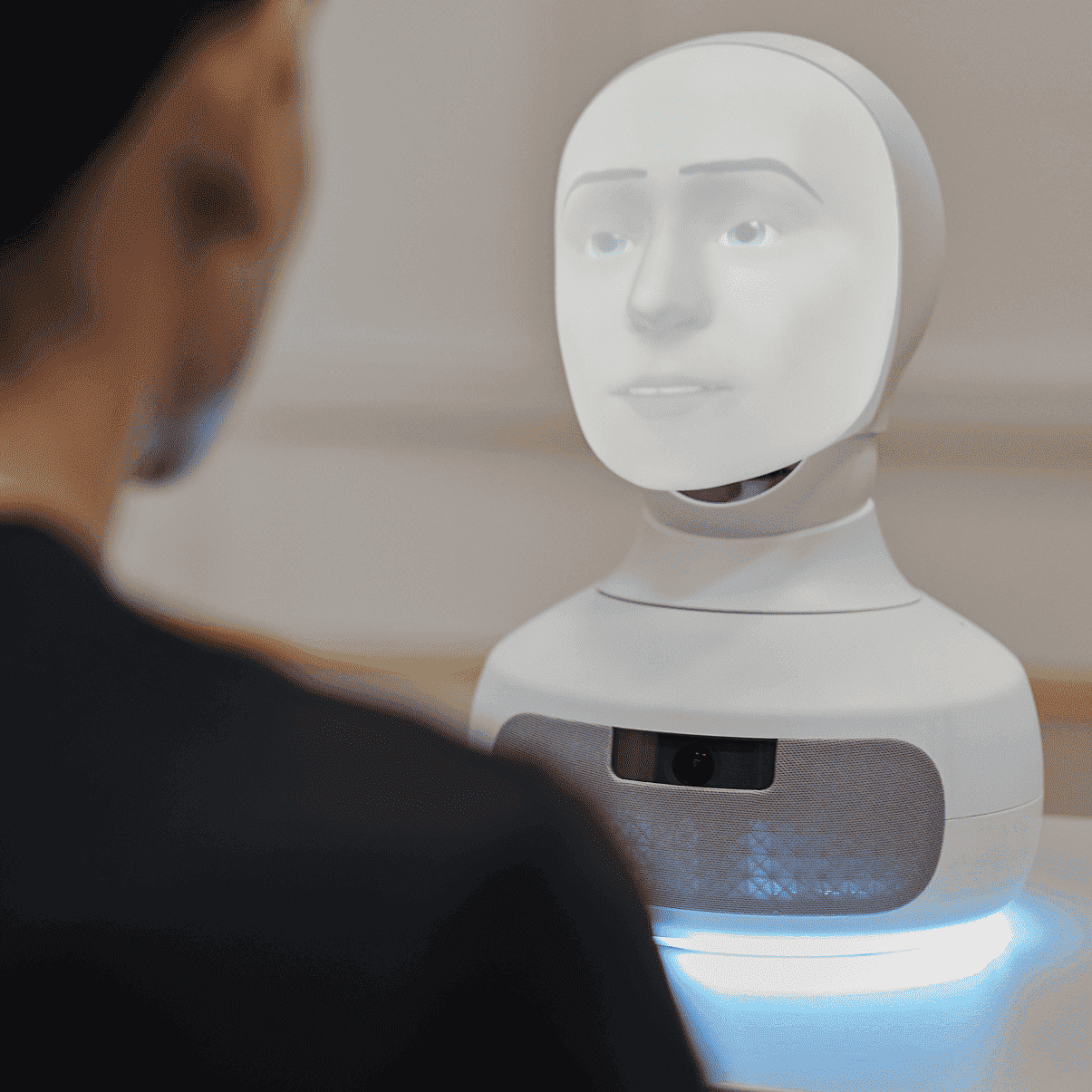

Trust is one of the fundamental elements in the development of a society. In our daily interactions with other people, we tend to judge them, albeit unconsciously, based on the trust they inspire in us. It is not simply a matter of whether we believe they have a good reputation, whether they are more or less intelligent, or whether we consider them good or bad people; rather, a set of cognitive, behavioral, and emotional characteristics conveys trust. For example, if a person frequently makes unfounded statements, we tend to regard their judgment and opinions as unreliable and grant them less and less credibility. Trust in Artificial Intelligence (AI) is an even more complex process. The main research question addressed in this project is: how can we measure and increase trust in AI-enabled machines? Accordingly, the main objective of the project is to obtain empirical evidence on how humans attribute trust to AI-based systems in the field of financial education, a topic of great relevance to financial institutions.

Objectives

In the CONFIA project, we will:

1. Identify the elements involved in generating trust between humans and AI.

2. Develop a methodology that allows for qualitative and quantitative measurement of user trust in an AI-powered system.

3. Conduct a pilot study to empirically validate the theoretical framework and proposed methodology.

Project

/research/projects/cara-ao-desenvolvemento-dunha-ia-fiable

José María Alonso Moral - Senén Barro Ameneiro, Alberto José Bugarín Diz
