CMOS Vision Sensors, Power Management, and Object Tracking on Embedded GPUs

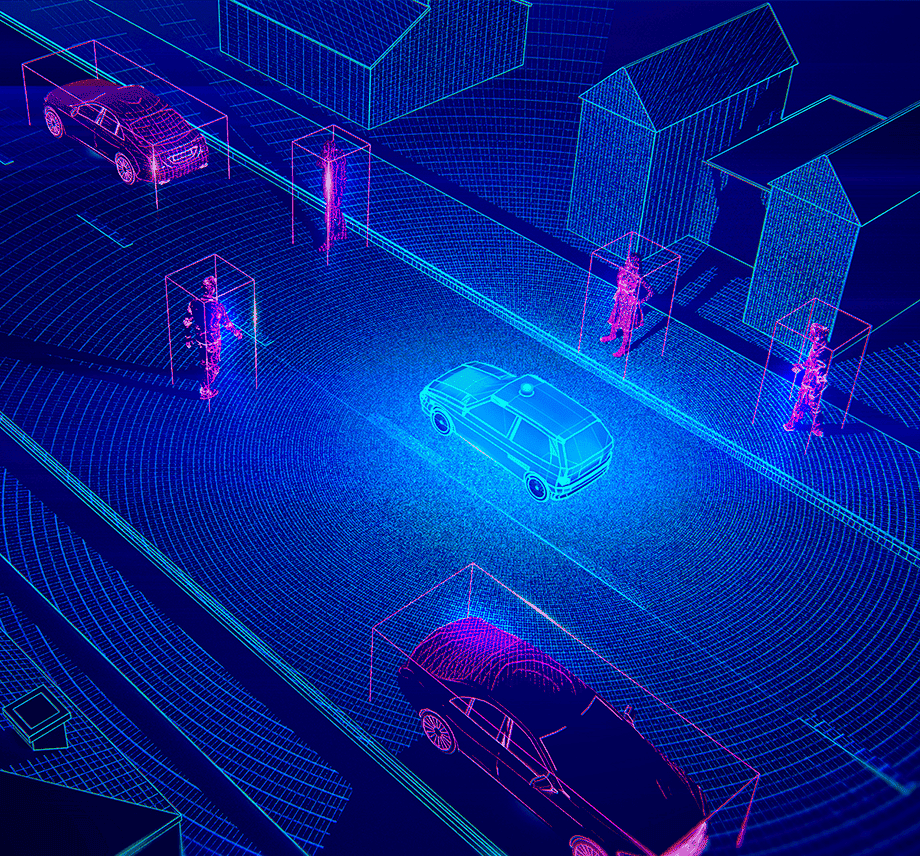

Our subproject addresses sensing and processing at the edge, where power, area and size are constrained, by developing enabling vision technologies for intelligent transportation.

In the sensing layer we target the aggregated local flow descriptor (ALFD), which gives a sparse yet rich representation of the objects in the scene for downstream tasks such as multi-object tracking. ALFD is the core of NOMT, a top-ranked multi-target tracker in the Multiple Object Tracking (MOT) challenge, which supports its suitability for high-accuracy tracking. The goal is to implement ALFD on a low Size, Weight and Power (SWaP) CMOS vision sensor, which would ease deployment in roadside infrastructure without access to mains power, or in battery-powered systems on board UAVs. The design process raises several scientific challenges in related topics, such as the design of reliable long-term memories and the reuse of processing blocks across different steps of the algorithm to save both area and power.

We also go beyond low-power design techniques by using energy harvesting, in the quest for self-powered CMOS vision sensors. Our previous work on energy harvesting led to the design of an on-chip power management unit (PMU) with a single unregulated output voltage in the nW to µW power range. This will be the starting point for a new PMU with multiple regulated output voltage levels and nW to mW range compliance, which widens the range of applications from implantable devices to IoT devices for traffic monitoring. A PMU with several output voltage levels allows voltage scaling: a low voltage level while the chip is in sleep mode, and a higher voltage level during processing to provide more accuracy. As a starting point, we also aim at a dual-mode chip in CMOS image sensor (CIS) technology that combines energy harvesting with background subtraction for tracking and uses the PMU outlined above.
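To make the background-subtraction step concrete, the following is a minimal software sketch, assuming a running-average background model with a fixed threshold. The project text does not specify which background model the chip will use, so the function names, the `alpha` learning rate and the `threshold` value are all hypothetical choices for illustration only.

```python
# Minimal sketch of background subtraction on grayscale frames.
# Assumption: running-average background model with a fixed threshold;
# the actual on-chip method is not described in the project text.

def update_background(background, frame, alpha=0.05):
    """Blend the new frame into the background (exponential running average)."""
    return [
        [(1 - alpha) * b + alpha * f for b, f in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


def foreground_mask(background, frame, threshold=25):
    """Mark pixels whose difference from the background exceeds the threshold."""
    return [
        [1 if abs(f - b) > threshold else 0 for b, f in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


def bounding_box(mask):
    """Return (top, left, bottom, right) of all foreground pixels, or None."""
    coords = [
        (r, c)
        for r, row in enumerate(mask)
        for c, value in enumerate(row)
        if value
    ]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))
```

A tracker could then follow the bounding box from frame to frame, calling `update_background` after each frame so that slow illumination changes are absorbed into the model.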

Finally, our project reaches the application layer, focusing on tasks in which we have gained experience through recent transfer projects, namely object detection through deep learning (DL) and multi-target tracking. These tasks form the core of applications such as vehicle counting or roundabout monitoring. These applications, together with the real-life data sets available from our ongoing transfer projects, provide the specifications for hardware design. We target embedded GPUs as the supporting hardware, which usually leads to a faster design cycle than their ASIC counterparts and thus greater flexibility to adapt to the rapid pace of new DL algorithms. Our final goal is to run state-of-the-art, high-accuracy algorithms on battery-operated high-end embedded GPUs that are easy to deploy for traffic monitoring, either on fixed posts along the road or on moving platforms such as vehicles or UAVs. To that end, redesigning DL algorithms through pruning or bit-width reduction will help meet the scientific challenge posed by the limited memory resources of embedded GPUs.
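As an illustration of how pruning and bit-width reduction shrink a model's memory footprint, here is a minimal sketch under stated assumptions: magnitude-based pruning and symmetric uniform quantization applied to a flat list of weights. The project text does not name the specific techniques it will use, so these two methods and all function names are hypothetical examples rather than the project's actual pipeline.

```python
# Minimal sketch of two model-compression steps on a flat list of weights.
# Assumptions: magnitude pruning and symmetric uniform quantization; the
# project text only mentions "pruning or bit width reduction" in general.

def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_symmetric(weights, bits=8):
    """Map float weights to signed integers of the given bit width.

    Returns the integer codes and the scale needed to recover approximate
    float values (value is roughly code * scale).
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]
```

Storing 8-bit codes instead of 32-bit floats cuts weight storage by a factor of four, and pruned zeros can additionally be stored in a sparse format. In practice, accuracy after either step has to be re-checked on the target data set.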

In summary, this project focuses on sensing and processing at the edge, where size, weight and power are usually constrained, which calls for low-power solutions that do not severely degrade accuracy or speed. This has led us to CMOS vision sensors with power management techniques and to low-power embedded GPUs. Finally, we focus on object detection and tracking because we consider these tasks the core of many traffic monitoring applications.

Project

/research/projects/sensores-cmos-de-vision-xestion-de-enerxia-e-seguimento-de-obxectos-sobre-gpus-empotradas

Víctor Manuel Brea Sánchez, Paula López Martínez - Diego Cabello Ferrer
